Datical is a tool for implementing DevOps for databases.

DevOps Concepts

While the concept of a develop/test/deploy pipeline is familiar to software development, it is often not a concept that DBAs have needed to be involved with or understand. With Agile software development and build automation becoming part of the day-to-day life of developers, automation and process is increasingly needed for the database as much as it is for code.

A Quick Primer on Release Pipelines

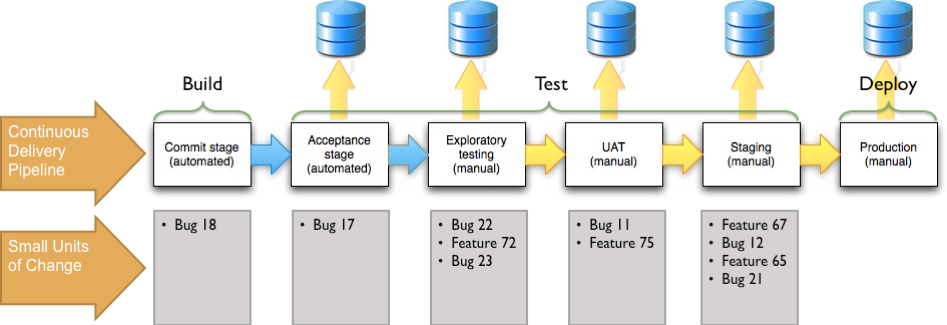

A release pipeline consists of the steps required to build, test and ultimately release completed software bug fixes and features to a production environment. The earliest stages of the pipeline are typically automated. Automation is almost always in place for building the application and performing low-level unit tests. Depending on the maturity of release automation, it can involve several levels of testing before reaching the final stage of production deployment. While all of these steps could be automated, complete automation through the entire process is exceedingly rare. Current practice involves manual testing near the end of the testing pipeline. As a final testing step, it is also common for the original person who requested the bug fix or feature to perform User Acceptance Testing of the software to validate that the implemented changes actually satisfy the original request or requirement. It’s only after layers of testing and acceptance validation that the software is made available for release.

To match the automation occurring with code development, schema changes need to progress with the code so both are ready for testing throughout the pipeline and ultimately available for release. In the picture above, the blue arrows represent automated steps in the process while the yellow arrows are manual. As can be seen, database deployments are almost always manual today. With the increasing build and test automation in place for code, the demand for schema test and deployment automation becomes more critical.

In addition to the single pipeline example, development can occur in multiple, parallel pipelines: one to address issues in the current release and one or more additional pipelines for parallel development of future releases. To support this workflow, multiple deployment pipelines will need to be modeled and managed from the database perspective. This way, changes can be deployed to match the deployment of code. When appropriate, schema changes can be merged into other pipelines to match the branching and merging of code that occurs in multi-pipeline software development.

Moving Database Changes to a DevOps Process

Before adopting DevOps processes, database changes typically are managed manually by database administrators. Developers submit database code for review and manual deployment to the databases associated with the application at each stage in the process.

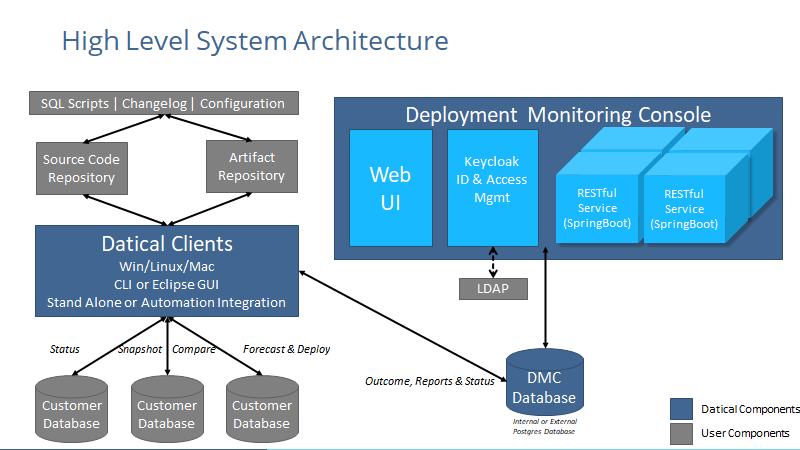

When you transition to using Datical, the database changes move to a model where they are reviewed, packaged, and deployed automatically through the pipeline in parallel with application code changes.

- Create a Datical project to define the workflow for database changes - the stages of the workflow parallel application code stages.

- Check database changes into Source Control Management (SCM) alongside application code.

- Datical tools package the SQL code into changesets and deploy the changes through the workflow

SCM Repositories for Database Code

First, configure a repository for the Datical project. Second, configure a repository for database code. It can be in the existing developer repository, or a new sql script repository exclusively used for database changes.

- Datical projects

- SQL code changes for a project

Configure 3rd party CI automation triggers or polling to start packaging operations automatically.

Datical Project: Defines the Databases for Each Stage and Deployment Order

A datical project (also called a deployment plan) defines the workflow for database changes.

- Database and Schema(s) for each stage

- Name and order of pipelines

- Name and order of stages within each pipeline

- Packaging and Deployment configuration information

Labels and Contexts: Managing the Database Change Workflow

Labels are user-defined text strings created during packaging or later in the deployment process. They are used to group related database changes, selectively deploy groups of changes, track deployed changes, and manage deployment workflow. Labels are automatically applied during packaging, specified by a developer at code check-in time and can also be specified by the DevOps engineer when configuring Datical. The standard labels most often used in a project are the script name, pipeline, and ticket number labels.

Contexts are used to group a subset of environments to deploy to. For example, use the context “Performance” to deploy to the Performance environment.

Automating Deployment Operations

Use automation software (build and release tools) to call Datical to perform these operations on database changes automatically. If you don't have automation in place yet, these operations can also be performed manually using the Datical CLI and/or Datical GUI.

Package SQL Code into Changesets

- Flexible interpretation of SQL

- Targeted handling for each type of SQL

- Apply labels for process tracking and change auditing

Validate SQL Code and Database Changes

- At multiple stages in the pipeline (starting with packaging), Datical checks the changes against rules that you configure. The rules reflect your DB administration practices and guidelines.

Forecast: Simulate and Assess the Changes

- Simulates the changes and compares them to the existing database

- Compliance with rules set by database administration

- Impact on the database - determine whether the changes introduce errors or conflicts

- If the forecast fails, the changes are not deployed!

Deploy: Apply the changes

- Simulate (again)

- Check rules (again)

- Changesets are deployed to the live database for a stage.

Status: Where are the changes?

- A changelog is used to assess each stage in the process. It represents all changes that have been packaged.

- Status shows whether all known changes have been deployed as well as what changes have or have not been deployed.